My Client recently complained that his linked server was

failing to connect with the following error,

“An error Occurred during Service Key decryption

There is no remote user ‘xxxxx’ mapped to local user ‘(null)’

from the remote server ‘xxxxx’.

(Microsoft SQL Server, Error : 33094)”

On checking the user permissions were right and the remote

login in which the Log server was configured had right permissions to access to

the Linked server.

When I did some googling I stumbled across, https://technet.microsoft.com/en-us/library/ms187788.aspx

“To

change the SQL Server service account, use SQL Server Configuration Manager. To

manage a change of the service account, SQL Server stores a redundant copy of

the service master key protected by the machine account that has the necessary

permissions granted to the SQL Server service group. If the computer is

rebuilt, the same domain user that was previously used by the service account

can recover the service master key. This does not work with local accounts or

the Local System, Local Service, or Network Service accounts. When you are

moving SQL Server to another computer, migrate the service master key by using

backup and restore.

The REGENERATE phrase regenerates the service master key. When

the service master key is regenerated, SQL Server decrypts all the keys that

have been encrypted with it, and then encrypts them with the new service master

key. This is a resource-intensive operation. You should schedule this operation

during a period of low demand, unless the key has been compromised. If any one

of the decryptions fail, the whole statement fails.

The FORCE option causes the key regeneration process to continue

even if the process cannot retrieve the current master key, or cannot decrypt

all the private keys that are encrypted with it. Use FORCE only if regeneration

fails and you cannot restore the service master key by using the RESTORE SERVICE MASTER KEY statement.”

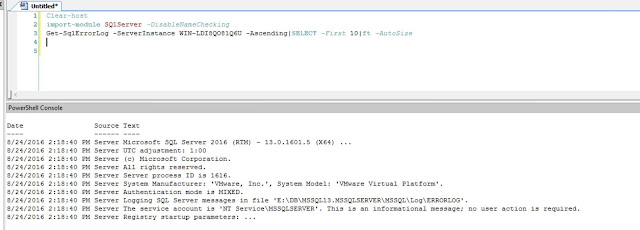

This is exactly what happened with my client, the SQL was

running under local account and the server was migrated.

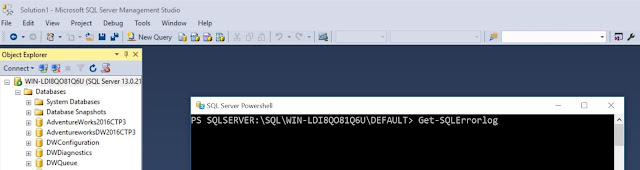

Once

I ran ALTER SERVICE

MASTER KEY FORCE REGENERATE the linked servers started

working gain.